ETL Big Data Refactoring

ETL Big Data

Refactoring

The customer

Our client is an important data company operating for 25 years in the marketing field in Italy and whose core business concerns the support of companies in the selected and qualitative acquisition of customers and partners.

The state of the art

The ETL (Extract, Transform and Load) procedures existing at the customer stored data on relational databases fed through data sources of various kinds, incrementally, updating the existing data with new information. This obsolescence caused significant problems, specifically:

- slowness of existing ETL procedures, as they are developed with technologies that are difficult to scale and parallelize;

- poor maintainability of the code, due to the technologies used now in disuse;

- high costs and difficulties in monitoring the various steps of the procedures;

- presence of bugs that generated anomalies and inconsistencies of the data and on which it was difficult to intervene;

The challenge

The customer then decided, supported by our consultants, to exploit Big Data technologies for rewriting procedures with the aim of:

- speed up execution times by solving the anomalies introduced by the procedures in use;

- apply transformations and integrate multiple data sources at each step of the computation by exploiting the existing logics;

- schedule and monitor the execution of pipelines;

- analyze the data while maintaining the software already in use in order to eliminate the training costs of the resources involved.

The solution

To meet the requirements it was therefore decided to adopt the following technologies:

- Python and Apache Spark to develop and execute data pipelines, rewriting the integration, matching and transformation logic and exploiting the parallelization and scalability offered by the framework;

- Amazon S3 and HDFS for distributed and replicated storage of data during all phases of computation (input, intermediate results and output);

- Cloud infrastructure for processing, considering that pipelines are performed at regular intervals and for a limited time and therefore an on-premise infrastructure is not required which involves much higher management costs (e.g. maintenance, unavailability or inactivity of resources, potentially variable SLA times, obsolescence);

- Apache Airflow for cloud infrastructure provisioning, scheduling and pipeline monitoring.

The benefits

By applying the above technologies, it has been possible to achieve significant increases in terms of speed in the execution of operations, data quality and infrastructure scalability and significant cost reductions.

Increased speed:

Increased speed:

- overall pipeline execution times have been reduced by 85%: steps previously performed in 3 hours now require less than 30 minutes;

- the developed pipelines operate on the entire data set, with a throughput of 1000 lines / second; the ETL procedures previously used operated on a small subset of data, with a throughput of 3 rows / second;

- the data are acquired in a few seconds: in fact, it is enough to load them on S3 to make them immediately available for computation.

Lower costs:

Lower costs:

- the use of a cloud solution, in “pay-as-you-go” mode, allows you to exploit the computing power of the cluster only when it is needed and deactivate it at the end of the computation, avoiding unwanted costs ;

- It was not necessary to train the resources that analyze the data involved, since the storage takes place in parallel also on the already existing relational databases; in fact, according to the customer’s requirements, the software for the analysis remained the same as previously used.

Improved quality and scalability:

- the data now no longer show the anomalies previously encountered: the quality of the information provided to customers has improved;

- it is now possible to monitor the various steps of the pipeline in order to identify any bottlenecks;

- thanks to the flexibility of cloud solutions, it would be easy to scale and optimize the infrastructure used, should it prove necessary to increase resources.

Marketing Automation

Marketing

Automation

The customer

The client company, a company operating in the energy market, has been developing a marketing campaign aimed at acquiring new customers for more than 2 years through the Google Ads and Facebook Ads platforms.

The problem

The marketing campaigns launched by the aforementioned company suffer from the lack of an analysis of the results, so it is difficult to evaluate their effectiveness.

The solution

The solution provided has a dual objective, namely to provide the client company with detailed reports on the impact of the major reference metrics in the Social Marketing field in relation to the campaigns already carried out and to equip the company with powerful forecasting tools in view of the new campaigns to be start in order to correctly target their promotional activities.

Planning and Implementation

The project implemented by Koros Consulting required access as an Analyst to the Google and Facebook Ads accounts of the client company, so as to be able to carry out a data mining activity followed by a data cleaning activity using tools such as Office and Power BI.

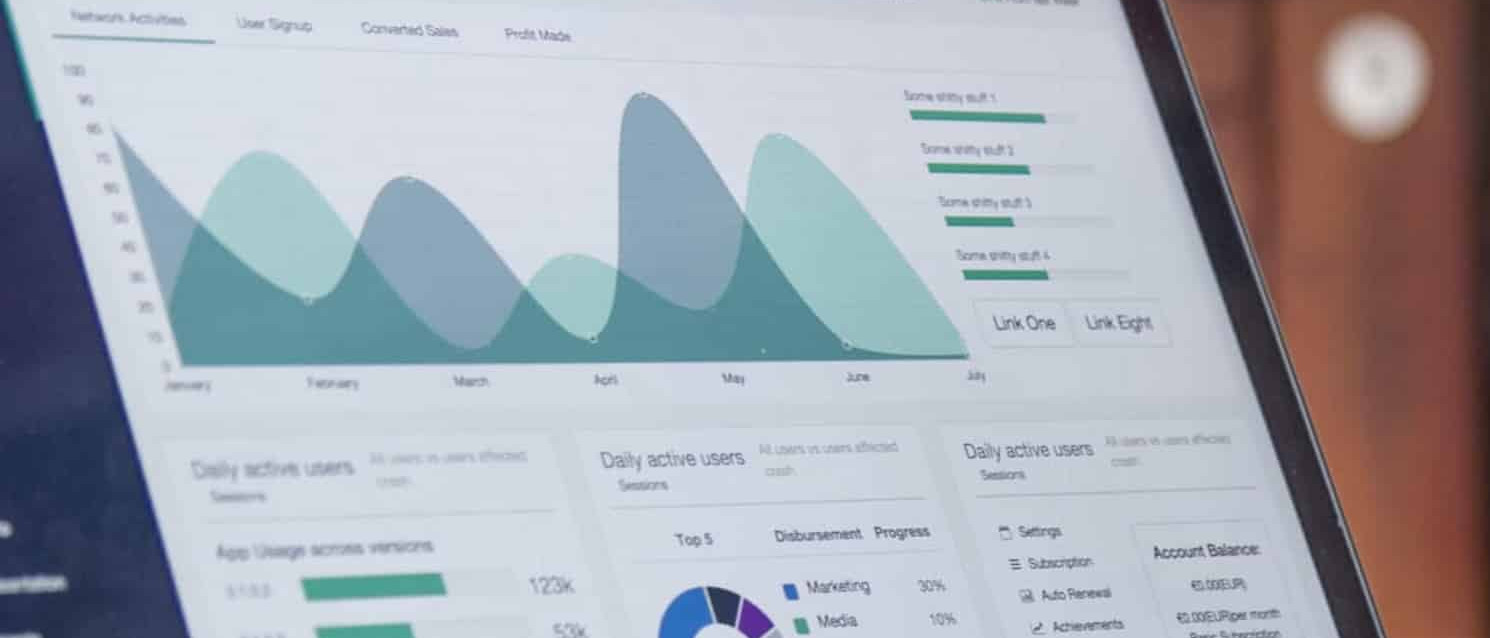

At a later stage, Power BI reports were created in the form of dashboards concerning the analysis of the aforementioned data relating to the time interval from 30/01/2017 to 25/10/2019.

The last phase involved the goal of designing machine learning algorithms using libraries developed ad-hoc in Python language to be implemented on a web interface using the Flask microframework for the backend and the Vuejs framework for the frontend.

Google Ads Analysis

In order to evaluate the implementation of the customer assistance service, Koros Consulting has analyzed the data relating to the telephone call log resulting from the results of the Google Ads campaigns in the period from 06/10/2017 to 04/10/2019 . In addition, the main Social Media Marketing metrics were analyzed to plan future marketing campaigns and establish the most suitable content to increase engagement, including: impressions, coverage, CTR, cost per conversion. The goal was to pay attention not only to the content, but also the time and date of publication on Google, in order to become aware of the variables that affect user feedback.

Facebook Insights Analysis

Finally, following a data mining and data cleaning activity aimed at evaluating the effectiveness of the posts published on Facebook, an analysis was carried out, through the Power BI software, of the data from the posts published on Facebook in the interval temporal from 13/10/2018 to 07/10/219, managing to obtain important information about times, days of the week, months and target people in which the posts were most effective in terms of impressions, engagement rate, coverage, results, clicks and other metrics as well as the results achieved by each type of post published, as well as possible future trends.

Facebook Ads Analysis

After carrying out an analysis of the posts in general, Koros Consulting focused on a specific analysis of the sponsored posts. Tracing the activities of the previous point, he was able to obtain detailed information on an hourly, daily and monthly basis of the main performance metrics, including: coverage, impressions, results, amount spent, cost per result and conversion rate. In this way it was possible to provide the client company with useful considerations to better target the next marketing strategies via Facebook.

Support application

The final phase of the project involved the creation of an application to support business decisions in the marketing field. After having developed and trained a machine learning algorithm, we proceeded to its web implementation through the Flask framework.

Car Rental Real Time Analytics

Car Rental

Real Time Analytics

The customer

Our client is a leading company in the car rental sector that operates throughout Italy and Eastern Europe, targeting business and private customers.

The state of the art

Over the years, the company has entered into agreements with different brokers for the management of quotes and car reservations. Specifically, the data on quotes, which do not always translate into reservations, are stored on a relational database. The company therefore expressed the following critical issues:

- The data remained unused and had duplicate records;

- It was difficult to integrate data from different sources;

- Monitoring and visualization would have required long development times and high costs if made with the technologies used by the company.

The challenge

The company therefore turned to Koros Consulting to develop an integrated solution for real-time data analysis through the creation of a pipeline and a dashboard that would allow it to monitor the performance of some KPIs. These are the needs:

- analyze in real time the progress of estimates;

- disambiguate duplicate quotes;

- compare quotes with actual bookings;

- view the data through dedicated dashboards;

- carry out historical analyzes.

The solution

To meet the requirements, it was therefore decided to build a real-time data pipeline using the following technologies:

- Confluent with which, through Kafka Connect, the data are taken in real time from the source databases, then they are disambigued and aggregated;

- RethinkDB, NoSQL database where Confluent reprocessed data is stored for persistence. The chosen database guarantees efficiency for real-time applications and is natively distributable and scalable;

- Python Dash for the development of dashboards that allow analysts to view, in self-service mode and in real time, the performance of identified KPIs. It is also possible to carry out, as required, customized historical analyzes using filters and export the data for subsequent analysis using spreadsheets;

- Docker for on-premise architecture development.

The benefits

The solution created in light of the customer's needs has made it possible to obtain not only performance improvements but above all a precious source of forecasts to support corporate decision-making processes.

Speed

In a few seconds, after their storage on the different sources, the data are integrated and made available. The use of Docker has also made it possible to significantly reduce the hardware and software infrastructure configuration times.

Integrity

Thanks to the elimination of duplicates, the starting dataset was made consistent and the anomalies contained therein were removed.

Insights

Using the data visualization tools, it is possible to monitor KPIs in real time and extract information from the data to make “data-driven” decisions.